Polish Language E-Learning System

Objective

The objective of this project was to design, prototype, and test an e-learning system utilizing the principles of learner-centered design. I developed a set of testable learning objectives for the course, designed the course content, and evaluated how well the system helped people meet those intended objectives.

Context

This e-learning system was created for HCI520: Learner-Centered Design at DePaul University.

Methods & Tools:

Axure RP 9

Role:

UI/UX Designer, Researcher, Course Content Creator

Course Description

I began the project by outlining the course goals and deciding what the course would cover. The course was limited to roughly 30 minutes, including both the pre-test and the post-test.

Scope:

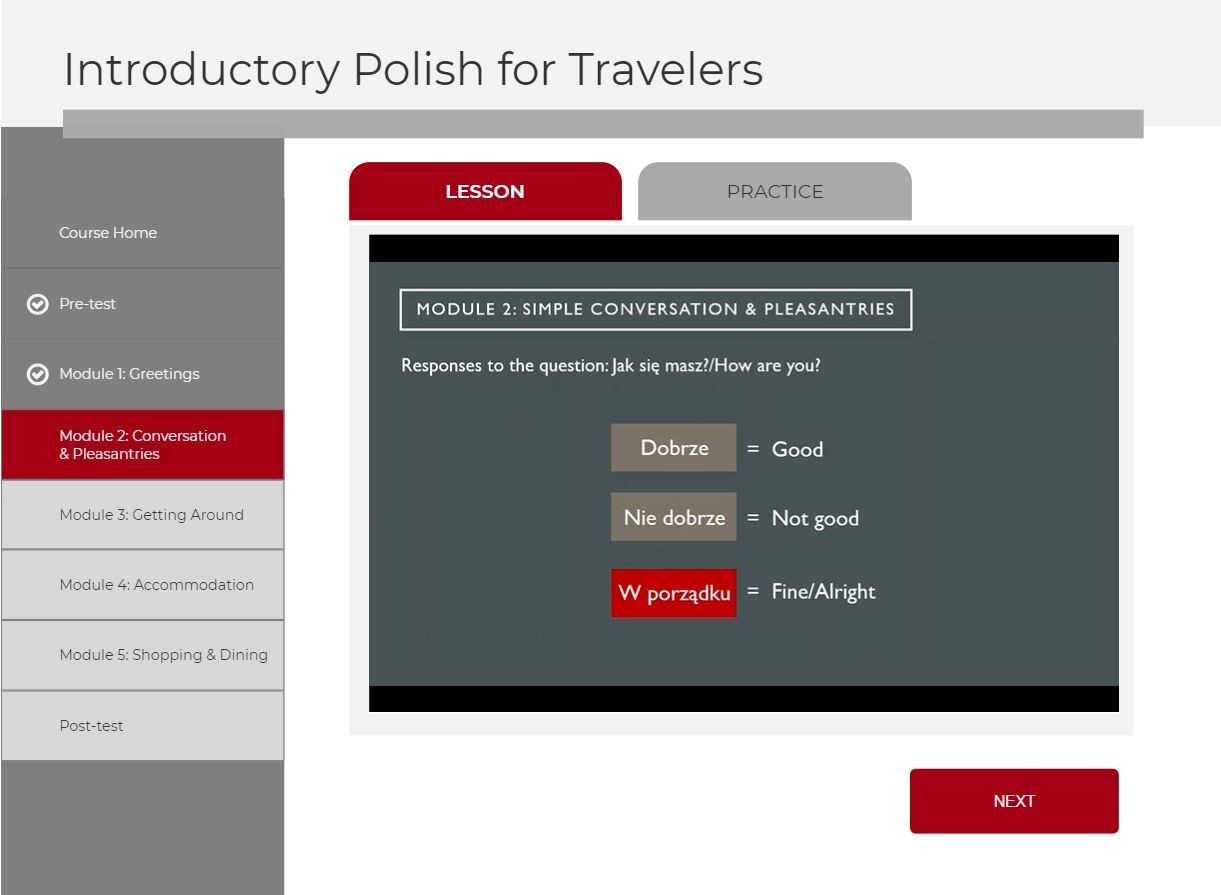

For my project, I created an e-learning system featuring an introductory Polish language lesson designed for travelers visiting Poland. The course covers vocabulary and phrases separated into the following modules:

• Basic Greetings

• Simple Conversation and Pleasantries

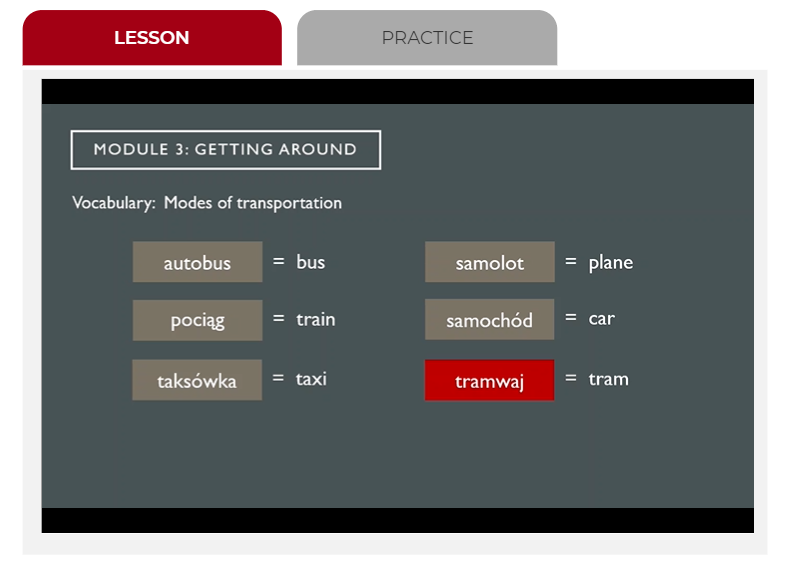

• Transportation and Getting Around

• Accommodation

• Shopping and Dining

Content Source:

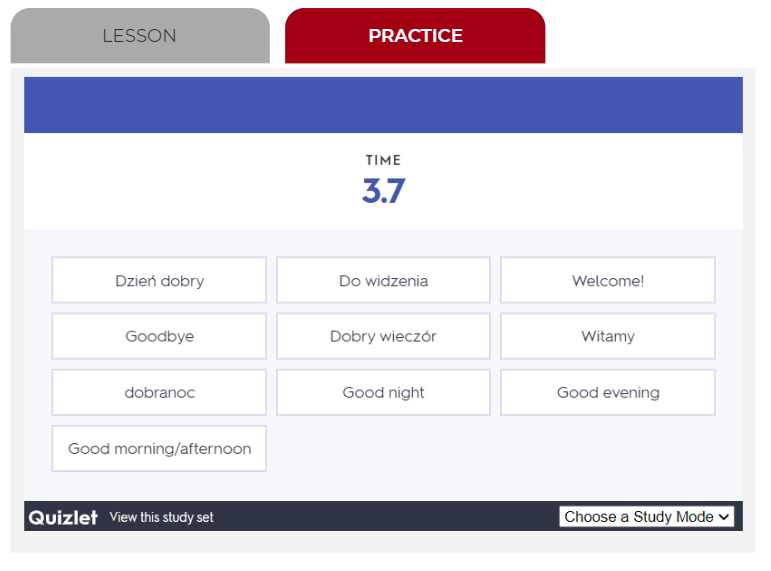

For this project, I relied on my own knowledge of Polish, as I am a fluent speaker. I used Quizlet to create a matching game for vocabulary practice.

Course Goals

After narrowing the scope of the lesson, I created five specific, testable learning goals, each measured by relevant questions on the pre-test and post-test.

By the end of the course, students should be able to:

• remember and use simple greetings and pleasantries in the correct context

• match destination/transportation vocabulary and phrases with the correct context and translation

• ask for directions to a destination (bathroom, museum, bus, etc.) and understand some basic directional responses

• ask the price of something and ask for an item they would like at a store, restaurant, or hotel

• use and match learned travel phrases and vocabulary to specific contexts of use

Application of Learner-Centered Design Principles

Contiguity Principle

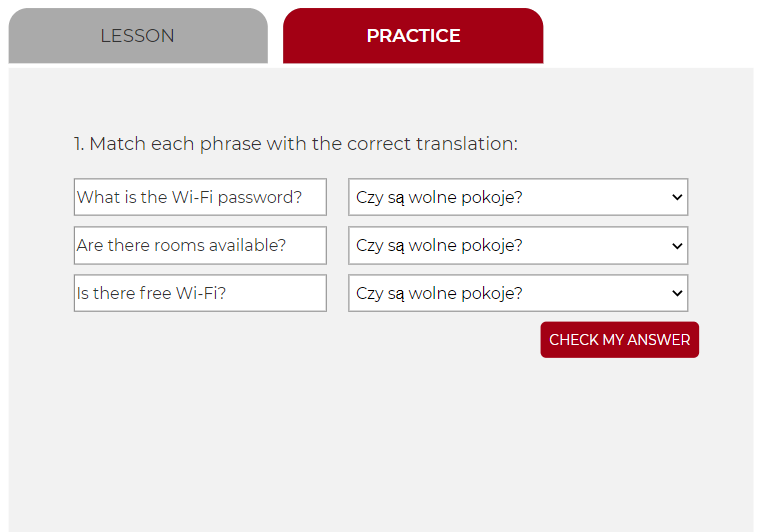

Although the lessons did not pair images with text, I applied the contiguity principle to the practice-question feedback. I placed the feedback directly beneath each question, next to the “Check My Answer” button, so that learners would see it quickly and easily. Here is an example:

Modality Principle

Rather than presenting information as text alone, I delivered each lesson as a video, with narration played over slides showing the vocabulary and phrases in written form:

Redundancy

Because this is a foreign-language course, I deliberately violated the redundancy principle, showing each word and phrase on screen while simultaneously speaking it in the audio. I highlighted each word as I spoke it to help learners follow along:

Segmenting

I divided the course into five modules to segment the information into manageable pieces:

Practice

I included a variety of practice activities in the system. Some modules featured a Quizlet matching game for vocabulary, while others used custom practice questions that gave feedback when the learner clicked “Check My Answer”:

Learner Control

Because the system is designed for beginners, I allowed very little learner control. Navigation was mostly system-controlled: the “Next” buttons stayed disabled until the learner had watched the lesson and then clicked through to the practice, and each module stayed locked until the previous ones were completed. Learners could, however, pause and replay the lesson videos as needed.
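The prototype implemented these rules with Axure's own interactions, so the Python below is only a hypothetical model of the gating logic described above, handy for checking that the rules fit together. The names `Module`, `Course`, `next_enabled`, and `is_unlocked` are illustrative, and treating a module as "completed" once its "Next" button is enabled is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Module:
    name: str
    lesson_watched: bool = False
    practice_opened: bool = False

    def next_enabled(self) -> bool:
        # "Next" stays disabled until the lesson has been watched
        # and the learner has clicked through to the practice.
        return self.lesson_watched and self.practice_opened


@dataclass
class Course:
    modules: list[Module] = field(default_factory=list)

    def is_unlocked(self, index: int) -> bool:
        # The first module is always open. A later module unlocks only when
        # every earlier module is complete (assumed here to mean that its
        # "Next" button is enabled).
        return all(m.next_enabled() for m in self.modules[:index])


course = Course([Module(name) for name in [
    "Basic Greetings",
    "Simple Conversation and Pleasantries",
    "Transportation and Getting Around",
    "Accommodation",
    "Shopping and Dining",
]])
```

Setting `lesson_watched` and `practice_opened` to `True` on the first module enables its "Next" button and unlocks the second module. The video controls (pause, replay) are left out because they do not affect navigation.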

For more details on all the learner-centered design principles applied in this system, see the full report here.

User Testing and System Evaluation

Participants

A total of 14 people participated: friends, friends of friends, and DePaul University students. While not all of them plan to travel to Poland, all were complete beginners who had never studied Polish before.

Testing

Each participant received a prototype link via email, along with a brief description of the project topic and instructions to take the pre-test, follow the system's instructions to complete the course, and then take the post-test. Testing was fully remote and completed at each participant's convenience. To link each participant's pre-test and post-test scores, I asked everyone to create a unique ID and enter it on both tests. I manually checked each submission and recorded all scores in an Excel spreadsheet. Each question was worth 1 point, and I graded question #8 as X/11, since it asked learners to categorize 11 words (see the full report linked above for the pre-test and post-test questions). To allow an accurate comparison, the pre-test and post-test used identical questions, presented in a different order.
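Matching submissions by participant ID was done by hand in Excel. As an illustration only, the same pairing step can be scripted; the sketch below keeps only IDs that appear on both tests, which also drops participants who skipped the pre-test. The function names are hypothetical, and normalizing IDs (ignoring case and stray spaces) is an assumption about how hand-typed IDs might differ.

```python
def normalize_id(raw_id: str) -> str:
    # Participants typed their IDs by hand, so ignore surrounding spaces
    # and letter case (an assumption, not part of the original workflow).
    return raw_id.strip().lower()


def pair_scores(pre: dict[str, float], post: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Match pre-test and post-test scores by participant ID.

    IDs found on only one test (e.g. a participant whose pre-test never
    loaded) are dropped, because they cannot be compared.
    """
    pre_by_id = {normalize_id(k): v for k, v in pre.items()}
    post_by_id = {normalize_id(k): v for k, v in post.items()}
    shared = sorted(pre_by_id.keys() & post_by_id.keys())
    return {pid: (pre_by_id[pid], post_by_id[pid]) for pid in shared}
```

For example, with hypothetical IDs, `pair_scores({"Kasia7 ": 4, "tomek22": 6}, {"kasia7": 9, "tomek22": 8, "ola3": 7})` keeps `kasia7` and `tomek22` and drops `ola3`, who has no pre-test score.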

Statistical Outcomes

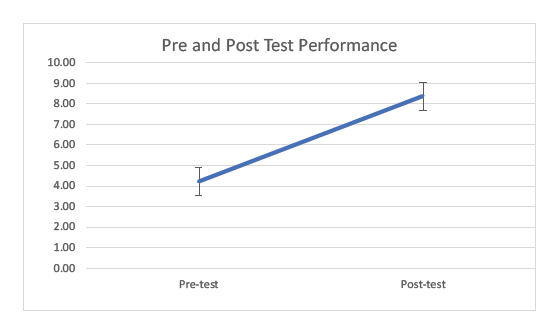

I conducted a paired t-test to compare the pre-test and post-test scores of the 14 participants. The difference was significant (t = -7.40, p < .05): participants scored higher after completing the e-learning system (M = 8.36, SD = 1.25) than before (M = 4.23, SD = 2.12).

t(13) = -7.40, p < .05
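For readers who want to see what the test computes, here is a minimal sketch of a paired t-test using only the standard library. It is not the tool used for the analysis above (the write-up doesn't name one), and `paired_t` is a hypothetical helper. Differences are taken as pre minus post, so an improvement yields a negative t, matching the sign of the reported result; the degrees of freedom are n - 1, which is 13 for 14 participants.

```python
import math
import statistics


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Return (t, df) for a paired t-test on matched pre/post scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two matched pre/post pairs")
    # Pre minus post, so higher post-test scores give a negative t.
    diffs = [a - b for a, b in zip(pre, post)]
    n = len(diffs)
    # Standard error of the mean difference, using the sample SD (n - 1).
    # If every difference is identical the SD is 0 and t is undefined.
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1
```

Getting a p-value requires the t distribution; `scipy.stats.ttest_rel(pre, post)` reports both the statistic (with the same sign convention) and the p-value.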

Effect Size:

Calculated Effect Size: 2.4

An effect size of 2.4 is very large (well above the conventional 0.8 threshold for a large effect), indicating that completing the e-learning course was associated with a substantial improvement in test scores.
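The write-up doesn't state which effect-size formula produced 2.4. Working back from the reported summary statistics, the "average SD" form of Cohen's d (mean difference divided by the root-mean-square of the two SDs) gives about 2.37, which rounds to 2.4, while d_z, computed from the t statistic as |t| / √n, gives about 1.98. This is an inference from the published numbers, not a statement of the method actually used; either value counts as very large.

```python
import math


def cohens_d_avg_sd(m_pre: float, sd_pre: float, m_post: float, sd_post: float) -> float:
    # Mean difference divided by the root-mean-square of the two SDs
    # (the "average SD" variant of Cohen's d for repeated measures).
    avg_sd = math.sqrt((sd_pre ** 2 + sd_post ** 2) / 2)
    return (m_post - m_pre) / avg_sd


def cohens_dz(t: float, n: int) -> float:
    # Standardized mean of the paired differences: d_z = |t| / sqrt(n).
    return abs(t) / math.sqrt(n)


# Summary statistics reported above (pre-test, then post-test).
d_av = cohens_d_avg_sd(4.23, 2.12, 8.36, 1.25)
d_z = cohens_dz(-7.40, 14)
print(f"d_av = {d_av:.2f}, d_z = {d_z:.2f}")  # d_av = 2.37, d_z = 1.98
```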

Overall Evaluation & System Limitations

Overall, the statistical tests indicate that the system was effective: the improvement was statistically significant, with a very large effect size. However, the evaluation has several limitations:

• A small sample size and a narrow age range: I recruited friends, friends of friends, and other students, but did not test with any older users.

• The assessment design: I wonder whether I should have used different questions on the post-test, with more of a “knowledge transfer” element, or designed the assessment questions in a format other than multiple choice.

• No audio in the assessments: I originally wanted to include audio, but found it too difficult to implement with the available tools and time constraints. This is worth considering in future development, since hearing and speaking the language is such a big part of learning it.

• Finally, results from an unmoderated remote test always carry some uncertainty, because you cannot observe participants and must trust that they followed the system instructions and did not look up answers.

Further Research & Development

In addition to trying audio clips in the assessments, in future iterations I would like to explore lesson formats that make more use of images and the multimedia principle, even though such images would probably be mostly representational.

I would also recommend either linking to the pre-test and post-test externally or building an additional Axure page that prevents participants from moving on to the lessons until they have taken the pre-test. A few participants missed the pre-test because it was an embedded Google Form that didn't load immediately, which made their post-test scores unusable. I was trying to keep the system uniform and streamlined, but in this case embedding the tests created more trouble than cohesion.

I might also compare groups of users. Some participants happened to be fluent in another language already, and I wonder whether similarities they noticed between that language and Polish raised their scores.

Finally, a mobile version of the system would better suit people who want to learn while they travel. The desktop version works well for learning before a trip, but a mobile e-learning system would be more convenient and useful on the go.